Background

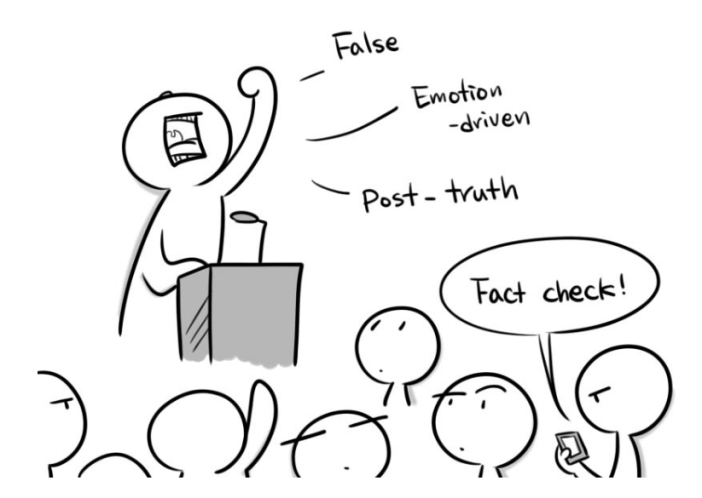

Our original problem was how to combat populism in the media, and how that populism is amplified by shares and conversation on social media. Populism is difficult to define, as it is a broad concept with many variables. We resolved to focus on the recent development of “post-truth” politics: facts being ignored in favor of emotional appeals.

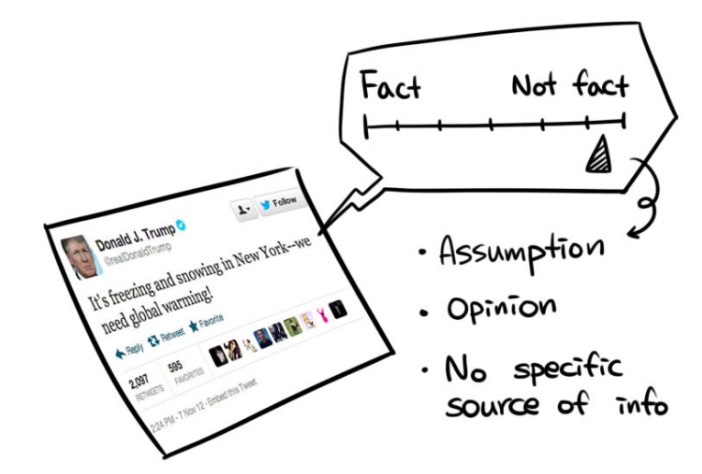

The political climate has been tumultuous over the past year, with upsets like Brexit and the unexpected election of Trump rocking the political and economic climates. Both campaigns were driven largely by non-factual information: PolitiFact (a politics fact-checking website) determined that 70 percent of Trump’s “factual” statements during his campaign fell into the categories of “mostly false”, “false” and “pants on fire” (PolitiFact, 2016). The Brexit campaign infamously advertised being able to save £350 million a week for the National Health Service by leaving the EU, a pledge that turned out to be non-factual (Helm, 2016). Publications like The New York Times and The Guardian have published think-pieces on the issue, which indicates that the phenomenon is increasingly being brought to the public’s attention (Davies, 2016; Viner, 2016).

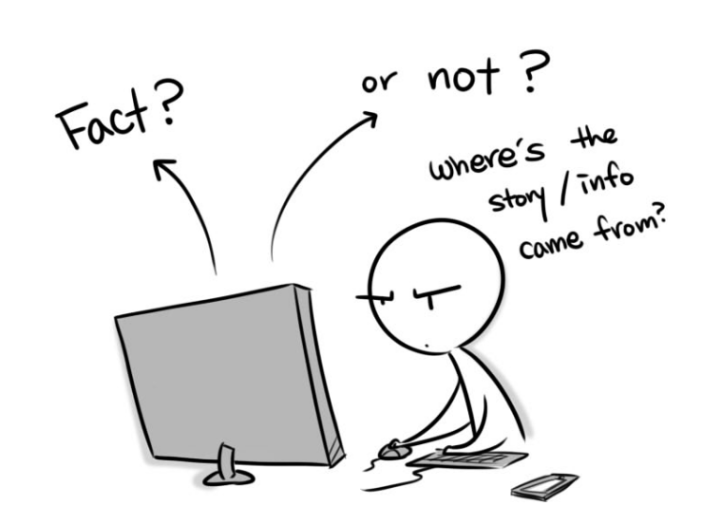

Reading articles about lying politicians has limited influence on individuals’ lives. People can of course become more aware, but that may not shield them effectively from the bombardment of emotional appeals. We want to create a service that helps people dissect the messages targeted at them in real time.

One of the most vulnerable services we identified is Twitter. As Twitter is a fast-paced information channel, misinformation can easily go unnoticed by the user. Politicians are increasingly using this medium to reach their voters with emotional appeals (Trump is infamous for his Twitter rants (Shabad, 2016)). We want to create a service that helps users fact-check tweets by politicians in real time.

Another problem we identified is how to remain impartial. Media houses are largely regarded as partisan to either Republicans or Democrats. We want to seek the truth in a non-partisan manner. Wikipedia came to mind as the ultimate non-partisan service, edited and fact-checked by people everywhere. We decided to emulate Wikipedia’s model: bringing facts to the people, from the people.

Currently, users solve this problem by Googling. Sites such as PolitiFact and FactCheck.org can be used to check statements made by politicians. This, however, requires dedication and time from the user. We want to remove that investment of time and dedication and make fact-checking instant. Our end users can install a plugin for their Twitter application that updates in real time. Users from all over the world will be able to fact-check tweets and give them a truth-rating. This will give the end user an instant answer to whether the tweet they are viewing is reliable. Moderators will be required to keep the information non-partisan.

The key parties in our solution are the users (both contributors and non-contributors), Twitter (we will use their API), and of course the politicians. Additionally, the contributors will use third-party media resources to fact-check the tweets. Perhaps we could cooperate with PolitiFact and FactCheck.org to bring their expertise to Twitter.

In the short run, we hope to affect the users. They will receive more reliable information on whether what the politicians are saying is true. This will hopefully lead to more informed voting behaviour, and more decisions based on actual facts.

In the long run, we could potentially affect the behaviour of politicians. If they begin to get called out on their untruths, perhaps non-factual emotional appeals will no longer pay off, and the world will have more reliable politicians.

In the following sections we will go through the concept, value, societal goals, and limitations in more detail.

Our Concept

What

Add-on for web browsers (Windows, MacOS), with

- Fact-checking functions, associated with social media (Twitter)

- User-generated community (wiki, discussion board)

Who it’s for

Internet or mobile users who

- Want to dig deeper into the truth, but are not sure where to start

- Want easy access to the source of information

- Wish to contribute to and/or spread the facts

How it works

- Install the add-on in the web browser

- Click the ‘Fact-Check’ button

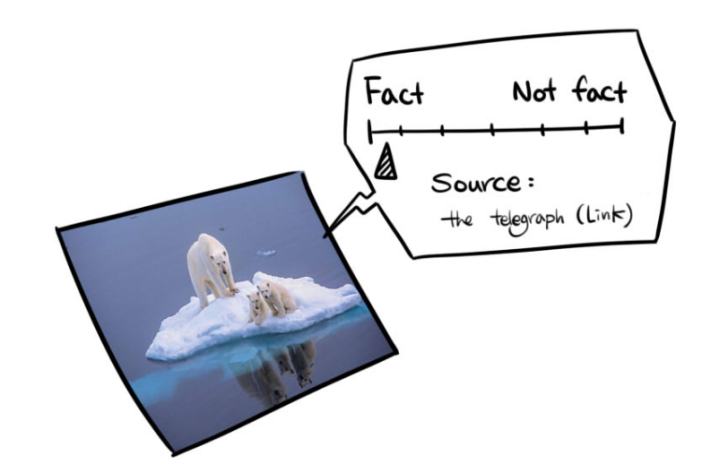

- See visual indicators (Green: close to fact / Red: close to false information or rumors)

- Click ‘Raising Voice’ to send feedback and/or contribute new information to the wiki

*Algorithm-based, providing links

*Aims to become more user-generated as more and more people contribute

What are other similar concepts

- Fact checker from one of the Junction 2016 projects

- http://www.pcworld.com/article/3143425/internet/facebook-working-with-fact-checkers-to-weed-out-fake-news.html

- http://nordic.businessinsider.com/students-solve-facebooks-fake-news-problem-in-36-hours-2016-11?utm_content=bufferd1b17&utm_medium=social&utm_source=facebook.com&utm_campaign=buffer-ti&r=US&IR=T

How do we differentiate

- Focus more on user-generated information and discussions (collaborative intelligence)

- Support other social media

- Encourage user participation by providing features and events such as ‘this week’s fact-finder’, ‘cracking the urban legend’ etc.

- Provide intuitive user interfaces

Diagram

https://docs.google.com/drawings/d/17VYVb47W5fKu1K-N3S2x0MELSBsIsO269eTL7Y0kAAo/edit

(UI mockups coming for final draft)

Value Proposition: Know What to Question

We identified our main customer segment as Twitter users who follow politicians. These users’ Twitter feeds are flooded with tweets that influence their decision making, political views and general understanding of world events. Social media, and Twitter in particular, has created an arena for political figures to push their political views with fast, short messages that are not always fact-checked by their readers. The user faces a problem of too much information and too little time for fact-checking.

But knowing whether a tweet is true is essential for the user to gain a fact-based, rational and objective world view, and, for example, to be able to vote for the most suitable candidate in elections. A Twitter user who follows political figures would gain a lot by knowing when a tweet lacks credibility and should be questioned.

Our product allows a Twitter user to know what to question by displaying a small indicator of the tweet’s ‘truth-value’. The truth-value is formed collectively by the community, simply by upvoting or downvoting the tweet. The user gets a fast visual cue of how the community rates a particular tweet, which immediately tells the user what other people think about its truthfulness. This also helps lessen and moderate the flood of questionable tweets.
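As a rough illustration of how a vote-based truth-value could drive the indicator, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `TweetVotes` shape, the simple upvote-share formula, the minimum number of votes, the thresholds, and the extra grey and yellow states (only green and red are part of our concept so far).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TweetVotes:
    """Community votes on one tweet (hypothetical data shape)."""
    upvotes: int    # votes saying the tweet looks factual
    downvotes: int  # votes saying the tweet looks false or misleading


def truth_value(votes: TweetVotes) -> Optional[float]:
    """Share of upvotes among all votes, or None if nobody has voted."""
    total = votes.upvotes + votes.downvotes
    if total == 0:
        return None
    return votes.upvotes / total


def indicator(votes: TweetVotes, min_votes: int = 10,
              green_at: float = 0.7, red_at: float = 0.3) -> str:
    """Map the truth-value to an indicator colour.

    The thresholds and the minimum vote count are assumed values,
    not decisions made in this report.
    """
    total = votes.upvotes + votes.downvotes
    if total < min_votes:
        return "grey"    # too few votes to show a rating (assumed state)
    score = votes.upvotes / total
    if score >= green_at:
        return "green"   # close to fact
    if score <= red_at:
        return "red"     # close to false information or rumors
    return "yellow"      # community is divided (assumed state)
```

For example, a tweet with 80 upvotes and 20 downvotes would show green, while one with only three votes in total would stay grey until more people have rated it. Requiring a minimum number of votes is one possible way to keep a handful of early voters from deciding the indicator on their own.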

There is also value for other parties (such as politicians, governments, big media houses, and citizens who do not use Twitter), in both the short and the long run. These will be examined in the final draft.

Societal goals

Our ultimate vision is a society that thinks about and questions everything it encounters, which helps it make better decisions. In the short run, we hope to achieve that by encouraging young people on social media to check the credibility of tweets and make healthier decisions about whether or not to follow their source. In the long run, however, we want to include other social platforms, which are becoming a vital source of news.

The people we are mainly targeting belong to the millennial generation and Generation Z. They are young adult social media users, aged between 17 and 35, who are becoming interested in politics.

Once people stop tolerating emotion-based statements on social media, we will know we have done a good job. Nonetheless, “good” is hard to quantify, so we consider a larger user base to be a good indicator. For instance, the ratio of people who use the service to those who vote in an election can indicate whether the service is useful. Still, a large number of users does not necessarily mean the tool is factual, which is discussed in detail in the next section.

We hope to create a ripple effect that starts with people who stop buying into post-truth tweets. This would decrease the popularity of populist politicians, which in turn would affect the politicians themselves: they could no longer rely on post-truth statements to gain acceptance and get elected. This would also create healthier competition between political parties, based solely on factual arguments.

Societal Limitations

Our solution is, of course, subject to a certain number of limitations. Achieving a sufficient user base is vital to our solution, since the content is going to be user-generated. As there is already a wide range of plugins and extra features on the market, it might be hard to stand out and gain the trust of potential users. To set our product apart from the others, and above all from the fake ones, we must invest in marketing and emphasize that our company remains impartial and that the service content is fully user-generated. Additionally, the literature on designing information technology proposes that, to earn customers’ trust, technology should account for human values in a principled and comprehensive manner throughout the design process, since people trust other people rather than technology (Friedman et al., 2000). This means we must operate transparently, and our aim must be clear to everyone.

Our specific target group is young adults who are accustomed to using social media and are aware of politics. They are a relatively easy group to reach, since they are skilled computer users and are able to question content on the internet. Moreover, they might already be looking for ways to identify trustworthy sources.

What about people who do not know how to use the internet and social media properly, such as the elderly? They may well be interested in the correctness of the news they read, but firstly, they are not necessarily aware of the need to question the news, and secondly, if they are aware, they do not know what to do about it. This group might be hard or even impossible to reach. Then there are people who do not follow politics or simply do not care about post-truth tweets. However, they might become interested in our plugin if there were enough hype around the theme of populism and our product had an established position as a reliable wiki plugin.

Our goals are, firstly, to affect the behaviour of politicians by making them realize that it is not worthwhile to use emotional appeals and, secondly, to have a society that questions things and makes better decisions based on facts. But how far can we really get with the plugin? How much can our solution really change? What if the effect is the opposite: people stop questioning the things they encounter and simply trust technical solutions like plugins?

Sources

PolitiFact. (2016). “Donald Trump’s File.” Available at: http://www.politifact.com/personalities/donald-trump/ [Accessed 23.11.2016]

Helm, T. (10th September, 2016). “Brexit camp abandons £350m-a-week NHS funding pledge”. The Guardian. Available at: https://www.theguardian.com/politics/2016/sep/10/brexit-camp-abandons-350-million-pound-nhs-pledge [Accessed 23.11.2016]

Davies, W. (24th August, 2016). “The Age of Post-Truth Politics”. The New York Times. Available at: http://www.nytimes.com/2016/08/24/opinion/campaign-stops/the-age-of-post-truth-politics.html [Accessed 23.11.2016]

Friedman, B., Kahn, P. H. & Howe, D. C. (2000). “Trust Online”. Communications of the ACM. Vol. 43(12), p. 34-40.

Viner, K. (12th July, 2016). “How technology disrupted the truth”. The Guardian. Available at: https://www.theguardian.com/media/2016/jul/12/how-technology-disrupted-the-truth [Accessed 23.11.2016]

Shabad, R. (22nd November, 2016). “Trump meeting with New York Times back on after Trump Twitter rant”. CBS News. Available at: http://www.cbsnews.com/news/donald-trump-twitter-rant-new-york-times-cancels-meeting/ [Accessed 23.11.2016]

One event that supports this assumption is Brexit. The leave campaign was heavily populist, with people being pushed to vote leave, without fully understanding the implications of it (farmers losing important subsidies [1] , the difficulty of emigrating, etc.). People’s emotions were appealed to, and decisions were not based on facts. Many people expressed their regret with their vote after the fact. An estimated 1.2 million Leave voters regret their choice [2].
